Network Transport in Conversational AI: The Unsung Hero of Voice Interactions

In the world of Conversational AI, much attention is paid to large language models (LLMs), speech recognition, and text-to-speech synthesis. But beneath the surface lies a critical yet often overlooked component: network transport.

Whether you're building a smart assistant, a customer support bot, or an interactive voice-based healthcare application, your system’s responsiveness and reliability hinge on how audio and data traverse the network.

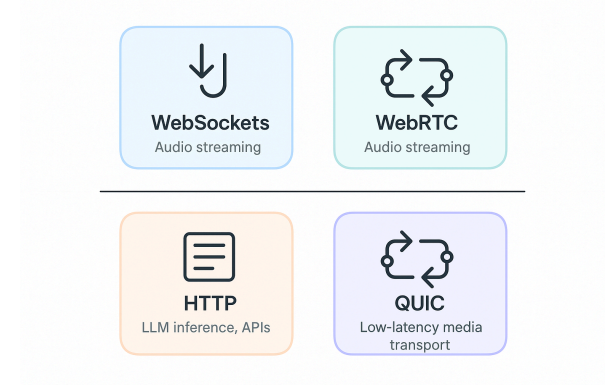

This post dives deep into the various protocols and architectural patterns used in voice AI systems, focusing on WebSockets, WebRTC, HTTP, QUIC, and network routing — and why the right choices here can make or break the user experience.

🔌 WebSockets vs WebRTC: Choosing the Right Pipe for Audio

WebSockets: Good Enough for Prototypes, Not Real-Time Voice

WebSockets offer a persistent, full-duplex communication channel over TCP. They are easy to implement and widely supported, making them a go-to choice for developers experimenting with voice AI.

- TCP & Head-of-Line Blocking: A single delayed packet can block all subsequent packets — a killer for real-time audio.

- Latency Accumulation: Without packet dropping or jitter handling, delays stack up quickly.

- Poor Media Observability: No native tools for monitoring audio quality.

- Complex Reconnection Logic: Requires significant custom logic for resilience.
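To make the head-of-line blocking point concrete, here is a minimal simulation sketch. It is not a real network stack: the `Packet` type, the 20 ms frame spacing, the 50 ms one-way delay, and the 200 ms stall on one frame are all assumptions chosen for illustration. It compares when each audio frame reaches the application under in-order (TCP-like) delivery versus unordered (UDP-like) delivery.

```python
from dataclasses import dataclass


@dataclass
class Packet:
    seq: int           # sequence number, one packet per 20 ms audio frame
    arrival_ms: float  # time the packet reaches the receiver


def in_order_delivery(packets: list[Packet]) -> dict[int, float]:
    """TCP-like: a packet reaches the app only after every earlier packet has."""
    delivered = {}
    latest = 0.0
    for p in sorted(packets, key=lambda p: p.seq):
        latest = max(latest, p.arrival_ms)  # wait for any earlier straggler
        delivered[p.seq] = latest
    return delivered


def unordered_delivery(packets: list[Packet]) -> dict[int, float]:
    """UDP-like: each packet is handed to the app as soon as it arrives."""
    return {p.seq: p.arrival_ms for p in packets}


# Five frames sent 20 ms apart over an assumed 50 ms one-way path.
packets = [Packet(seq=i, arrival_ms=50 + 20 * i) for i in range(5)]
# Frame 1 is stalled by 200 ms, standing in for a loss plus retransmission.
packets[1].arrival_ms += 200

tcp_like = in_order_delivery(packets)
udp_like = unordered_delivery(packets)
for seq in range(5):
    print(f"frame {seq}: in-order {tcp_like[seq]:.0f} ms, unordered {udp_like[seq]:.0f} ms")
```

In this toy run, frames 2 to 4 arrive on time but are held back behind frame 1 under in-order delivery, which is exactly the delay a real-time audio pipeline would rather skip.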

Key Insight

While LLMs and speech synthesis often get the spotlight, real-time voice AI depends critically on network transport — from WebRTC to QUIC. This blog dives into protocols, latency strategies, and why WebRTC still dominates production pipelines.

WebRTC: Designed for Real-Time Media

- Uses UDP: Late or lost packets don't hold up the packets behind them, which keeps audio latency low.

- Opus Codec Integration: Opus supports forward error correction and works closely with WebRTC's bandwidth estimation and packet pacing.

- Built-in Timestamps: Simplifies playout and interruption handling.

- Robust Enhancements: Includes echo cancellation, noise suppression, gain control.

- Telemetry: Real-time stats allow precision media tuning.

✅ Use WebRTC if latency and audio quality matter — ideal for browsers and mobile voice AI clients.

🌐 HTTP: The Workhorse for Text & Control

While HTTP isn't ideal for audio streaming, it is crucial in other voice AI tasks:

- Text-based Inference: Send transcripts via REST to LLMs.

- Service Integration: HTTP connects to CRMs, EMRs, etc.

- Function Proxying: Calls offloaded to HTTP endpoints decouple core logic.

Limitations:

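- Connection setup latency: TCP and TLS handshakes on a new connection can add 100 ms or more, depending on round-trip time

- No native support for low-latency, bidirectional media streaming

- Inefficient binary handling: audio embedded in JSON has to be base64-encoded, which makes it about one third larger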

Conclusion: HTTP is essential — just not for low-latency audio.
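The base64 overhead is easy to check with a few lines of arithmetic. The sketch below assumes 16 kHz, 16-bit mono PCM in 20 ms frames and a hypothetical `{"audio": ...}` JSON payload shape; neither is prescribed by any particular API.

```python
import base64
import json

SAMPLE_RATE_HZ = 16_000  # assumed: 16 kHz mono audio
BYTES_PER_SAMPLE = 2     # assumed: 16-bit PCM
FRAME_MS = 20            # assumed frame duration

frame_bytes = SAMPLE_RATE_HZ * BYTES_PER_SAMPLE * FRAME_MS // 1000
raw_frame = bytes(frame_bytes)  # silence stands in for real audio

# base64 turns every 3 input bytes into 4 output characters.
encoded = base64.b64encode(raw_frame).decode("ascii")
json_body = json.dumps({"audio": encoded})  # hypothetical payload shape

overhead = len(encoded) / len(raw_frame) - 1
print(f"raw frame: {len(raw_frame)} bytes")
print(f"base64:    {len(encoded)} bytes ({overhead:.0%} larger)")
print(f"JSON body: {len(json_body)} bytes")
```

A binary transport can send the raw frame as-is, so this overhead applies only when audio is forced through a text format.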

Core Components

- WebSockets vs WebRTC: Compare WebSockets (great for prototypes) with WebRTC (purpose-built for real-time audio) in terms of latency, reliability, and media quality.

- HTTP and QUIC in Voice AI: While HTTP powers inference and APIs, QUIC brings the next generation of low-latency transport, fusing speed and encryption.

- Network Routing and Latency: Routing through edge servers and private backbones is crucial to reduce jitter and ensure consistent voice interactions globally.

- Latency Benchmarks: Understand RTT differences across geographies and how they affect 1-second latency budgets in conversational flows.

- Protocol Use Cases: Learn when to use WebRTC, WebSockets, HTTP, or QUIC depending on your voice AI architecture goals.

🚀 QUIC: The Next-Gen Transport for Real-Time AI

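QUIC (originally "Quick UDP Internet Connections"), first developed at Google and now an IETF standard that underpins HTTP/3, combines TCP-style reliability and congestion control with the lightweight setup of UDP:

- Multiplexed streams (no head-of-line blocking between streams)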

- Fast connection setup

- Built-in encryption

- Bidirectional communication

⚠ Caveat: Browser support varies (e.g., Safari lacks full WebTransport). But standards like Media over QUIC (MoQ) are progressing fast.
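To put "fast connection setup" into numbers, here is a rough back-of-the-envelope sketch. It counts only handshake round trips before the first request byte can be sent, and ignores packet loss, server processing time, and optimizations such as TCP Fast Open. The two RTT values are the long-haul and nearby figures quoted in the routing section of this post.

```python
# Round trips spent on connection setup before the client can send a request.
SETUP_ROUND_TRIPS = {
    "TCP + TLS 1.2": 3,            # 1 for TCP, 2 for the TLS 1.2 handshake
    "TCP + TLS 1.3": 2,            # 1 for TCP, 1 for the TLS 1.3 handshake
    "QUIC (new connection)": 1,    # transport and TLS 1.3 handshakes combined
    "QUIC (0-RTT resumption)": 0,  # data can ride along with the first packet
}


def setup_delay_ms(rtt_ms: float) -> dict[str, float]:
    """Idealized setup delay per transport for a given round-trip time."""
    return {name: trips * rtt_ms for name, trips in SETUP_ROUND_TRIPS.items()}


for rtt in (15, 140):
    print(f"RTT {rtt} ms")
    for name, delay in setup_delay_ms(rtt).items():
        print(f"  {name:<24} {delay:>5.0f} ms")
```

On a long-haul path, the difference between two setup round trips and one is already a noticeable slice of a one-second conversational budget, which is why connection reuse and QUIC both matter more as RTT grows.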

🌍 Network Routing: Proximity Matters More Than You Think

Even with the best stack, long-haul connections hurt latency:

- User in the UK ↔ server in California: RTT ≈ 140 ms

- User in the UK ↔ server in London: RTT ≈ 15 ms

That's nearly a 10x difference, which is critical for sub-second voice interaction.

- Edge Servers: Deploy close to users (like CDNs).

- Private Backbones: Avoid public internet congestion.

- Jitter Buffers: Compensate for delayed packets but add lag.
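The jitter buffer trade-off can be sketched with a toy fixed-delay buffer. This is a simplified illustration, not how WebRTC's adaptive jitter buffer works: the `Packet` type, the 20 ms frame size, and the jitter values are assumptions. Each frame gets a playout deadline, and any packet arriving after its deadline is dropped.

```python
from dataclasses import dataclass

FRAME_MS = 20  # assumed audio frame duration


@dataclass
class Packet:
    seq: int           # frame sequence number
    arrival_ms: float  # time the packet reaches the receiver


def jitter_buffer(packets: list[Packet], buffer_ms: float) -> tuple[list[int], list[int]]:
    """Return (played, dropped) sequence numbers for a fixed-delay buffer."""
    # Reference point: the fastest packet, normalized to frame 0.
    base = min(p.arrival_ms - p.seq * FRAME_MS for p in packets)
    played, dropped = [], []
    for p in sorted(packets, key=lambda p: p.seq):
        deadline = base + buffer_ms + p.seq * FRAME_MS
        if p.arrival_ms <= deadline:
            played.append(p.seq)
        else:
            dropped.append(p.seq)  # arrived too late to be played
    return played, dropped


# Assumed per-packet jitter (ms) on top of a 50 ms one-way delay.
jitter = [0, 5, 40, 10, 70, 0]
packets = [Packet(seq, 50 + seq * FRAME_MS + j) for seq, j in enumerate(jitter)]

for buffer_ms in (20, 60, 80):
    played, dropped = jitter_buffer(packets, buffer_ms)
    print(f"buffer {buffer_ms} ms: played {played}, dropped {dropped}")
```

A larger buffer rescues more late packets, but every millisecond of buffer is added to the end-to-end latency of every frame, including the ones that arrived on time.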

Implementation Roadmap

1. Use WebRTC for real-time voice transmission with low jitter and echo control

2. Leverage HTTP for LLM requests and external API calls

3. Experiment with QUIC for future-proofing your real-time media stack

4. Deploy edge servers to reduce RTT and improve audio responsiveness

5. Design routing-aware architectures to ensure consistent performance across regions
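One small piece of a routing-aware design is sending each client to the closest edge region. The sketch below assumes you already have a few RTT probes per region; the region names and sample values are hypothetical, and how the probes are collected is left out. It picks the region with the lowest median RTT so a single slow probe does not skew the choice.

```python
from statistics import median


def pick_region(rtt_samples_ms: dict[str, list[float]]) -> str:
    """Return the region with the lowest median RTT across its probes."""
    if not rtt_samples_ms:
        raise ValueError("no regions to choose from")
    return min(rtt_samples_ms, key=lambda region: median(rtt_samples_ms[region]))


# Hypothetical probe results (ms) for a client in the UK.
samples = {
    "eu-london": [14.0, 16.0, 15.0, 90.0],  # includes one outlier probe
    "us-california": [139.0, 141.0, 140.0, 142.0],
}

print(pick_region(samples))
```

Using the median rather than the mean or the minimum is a design choice for this sketch; a production system would likely also weigh jitter, packet loss, and server load.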

🧭 Summary: Which Protocol When?

🗺 Architecting for the Real World

Modern conversational apps mix multiple protocols:

- WebRTC for real-time audio

- HTTP for LLMs and services

- QUIC as a future-ready solution

- Use edge and private networks to ensure stability

🛠 Final Thought

It’s tempting to focus on the “intelligence” in Conversational AI. But speed, reliability, and transport intelligence matter just as much. Network transport is the hidden backbone of great voice experiences.
