Under the Hood: The Building Blocks of Modern Conversational AI

From the smart speakers in our homes to the chatbots guiding us through customer service, conversational Artificial Intelligence (AI) has seamlessly woven itself into the fabric of our daily lives. These systems understand, process, and respond to human language in ways that feel increasingly natural. But what makes this possible? Let’s dive into the architecture powering modern conversational systems.

Key Insight

From ASR to LLMs, this post explains how voice assistants and chatbots understand, reason about, and respond to human language.

The Conceptual Architecture: A High-Level Blueprint

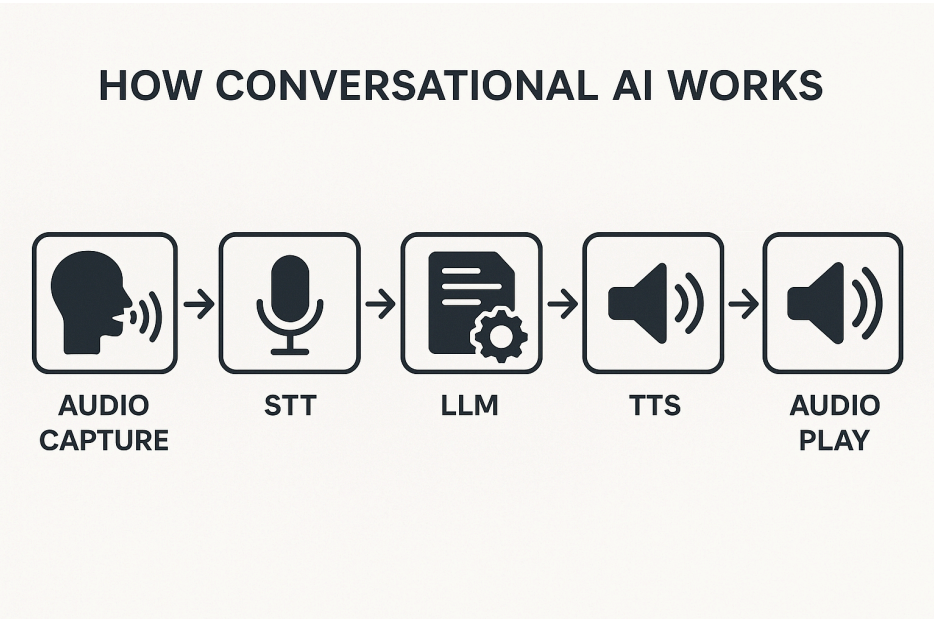

Conversational AI generally follows this flow:

- Input: The user speaks or types a message

- Processing: The AI interprets the input using NLP

- Response Generation: The system formulates a relevant reply

- Output: The reply is delivered as text or speech

Each stage involves complex components and models working together in harmony.

Core Components

- Automatic Speech Recognition (ASR): Transforms human speech into machine-readable text using deep learning models such as CNNs and RNNs, often trained with CTC loss.

- Natural Language Understanding (NLU): Extracts user intent and entities from input text using word embeddings and transformer models like BERT.

- Dialogue Management (DM): Maintains context and determines appropriate system responses using rules, statistical models, or neural networks.

- Natural Language Generation (NLG): Constructs grammatically correct and meaningful replies based on system decisions.

- Text-to-Speech (TTS): Generates natural, expressive, human-like speech from text using models like Tacotron and WaveNet.

- Large Language Models (LLMs): Massive transformer-based models that can unify NLU, DM, and NLG capabilities for end-to-end conversational AI.

The Sensory Layer: Input and Output

Automatic Speech Recognition (ASR)

ASR converts spoken audio to text. It uses an acoustic model to identify phonemes from sound waves and a language model to predict likely word sequences. Deep neural networks such as CNNs and RNNs (e.g., LSTMs, GRUs) typically serve as the acoustic model, and CTC loss lets them be trained on audio and transcript pairs without frame-level alignments.
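
To make the CTC idea concrete, here is a minimal sketch of greedy CTC decoding, assuming the acoustic model has already picked one label per audio frame. The `_` blank symbol and the function name are illustrative choices, not part of any specific ASR library.

```python
from itertools import groupby

BLANK = "_"  # hypothetical symbol standing in for the CTC blank token


def ctc_greedy_decode(frame_labels):
    """Collapse per-frame labels into text: merge repeats, then drop blanks."""
    # groupby merges runs of identical consecutive labels into one.
    collapsed = [label for label, _ in groupby(frame_labels)]
    # Blanks separate genuine double letters ("l_l") and carry no text.
    return "".join(label for label in collapsed if label != BLANK)


# One label per frame, as the argmax of an acoustic model's output might look.
frames = list("hh_e_ll_lll_oo")
print(ctc_greedy_decode(frames))  # hello
```

Production systems usually replace the greedy step with beam search and combine it with the language model's scores.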

Text-to-Speech (TTS)

To convert text responses back into speech, modern systems use neural TTS models such as Tacotron, which predicts spectrograms from text, and WaveNet, which generates the audio waveform, for realistic, expressive voice synthesis.

Implementation Roadmap

1. Capture input via voice or text

2. Use ASR (if voice) to transcribe to text

3. Use NLP to understand intent, sentiment, and extract entities

4. Maintain dialogue context and determine next action

5. Generate coherent and human-like response

6. Convert response to speech using TTS (if needed)
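
The roadmap can be sketched as a chain of small functions, one per step. Everything below is a placeholder under stated assumptions: the function names are hypothetical, "audio" is faked as UTF-8 bytes, and each stub marks where a real ASR, NLU, or TTS model would be called.

```python
from dataclasses import dataclass, field


@dataclass
class Turn:
    text: str
    intent: str = "unknown"
    entities: dict = field(default_factory=dict)


def transcribe(audio: bytes) -> str:
    # Step 2 placeholder: a real system would run an ASR model here.
    return audio.decode("utf-8")


def understand(text: str) -> Turn:
    # Step 3 placeholder: a trivial keyword check instead of an NLU model.
    intent = "greet" if "hello" in text.lower() else "unknown"
    return Turn(text=text, intent=intent)


def decide(turn: Turn, context: list) -> str:
    # Step 4: record the turn in the context and choose the next action.
    context.append(turn.intent)
    return "say_hello" if turn.intent == "greet" else "ask_clarification"


def generate(action: str) -> str:
    # Step 5 placeholder: canned replies instead of a generation model.
    replies = {
        "say_hello": "Hello! How can I help?",
        "ask_clarification": "Sorry, could you rephrase that?",
    }
    return replies[action]


def synthesize(text: str) -> bytes:
    # Step 6 placeholder: a real system would run a TTS model here.
    return text.encode("utf-8")


def handle(user_input, context: list, voice: bool = False):
    # Step 1: input arrives as bytes (voice) or as a string (text).
    text = transcribe(user_input) if voice else user_input
    reply = generate(decide(understand(text), context))
    return synthesize(reply) if voice else reply


context = []
print(handle("Hello there", context))  # Hello! How can I help?
```

Keeping each stage behind its own function means any one of them can later be swapped for a real model without touching the rest.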

The Core Brain: Natural Language Processing (NLP)

Natural Language Understanding (NLU) enables intent recognition, entity extraction, and sentiment analysis. Techniques include word embeddings and transformer models like BERT.
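
As a rough illustration of what NLU produces, the sketch below maps an utterance to an intent and a set of entities. It uses keyword and regex matching purely as a stand-in for a trained classifier such as a fine-tuned BERT model; the intent names, keyword lists, and the `parse` function are all assumptions made for the example.

```python
import re

# Hypothetical intent inventory: intent name -> trigger keywords.
INTENT_KEYWORDS = {
    "get_weather": ("weather", "forecast", "rain"),
    "set_alarm": ("alarm", "wake me"),
}
DATE_WORDS = ("today", "tomorrow", "tonight")


def parse(utterance: str) -> dict:
    """Return the detected intent and entities for one utterance."""
    text = utterance.lower()
    intent = next(
        (name for name, keywords in INTENT_KEYWORDS.items()
         if any(keyword in text for keyword in keywords)),
        "unknown",
    )
    entities = {}
    # Naive location rule: a capitalized word following "in".
    match = re.search(r"\bin ([A-Z][a-z]+)", utterance)
    if match:
        entities["location"] = match.group(1)
    # Naive date rule: the first known relative-date word.
    for word in DATE_WORDS:
        if re.search(rf"\b{word}\b", text):
            entities["date"] = word
            break
    return {"intent": intent, "entities": entities}


print(parse("What's the weather in London tomorrow?"))
# {'intent': 'get_weather', 'entities': {'location': 'London', 'date': 'tomorrow'}}
```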

Dialogue Management (DM) handles context tracking and flow. It may use rules, probabilistic models (e.g., POMDPs), or neural networks to maintain conversation logic.
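
A rule-based dialogue manager can be sketched as a slot-filling loop: it remembers what the user has already said and asks for whatever is still missing. This is a minimal example of the rules approach only (not POMDPs or neural policies), and the class, slot names, and action tuples are hypothetical.

```python
# Hypothetical schema: which slots each intent needs before it can be fulfilled.
REQUIRED_SLOTS = {"get_weather": ("location", "date")}


class DialogueManager:
    def __init__(self):
        self.intent = None
        self.slots = {}

    def step(self, intent: str, entities: dict):
        """Update the tracked state with one NLU result and pick the next action."""
        if intent != "unknown":
            self.intent = intent
        # Context tracking: entities from earlier turns are kept.
        self.slots.update(entities)
        if self.intent is None:
            return ("clarify", None)
        for slot in REQUIRED_SLOTS.get(self.intent, ()):
            if slot not in self.slots:
                return ("request", slot)
        return ("fulfil", dict(self.slots))


dm = DialogueManager()
print(dm.step("get_weather", {"location": "London"}))  # ('request', 'date')
print(dm.step("unknown", {"date": "tomorrow"}))
# ('fulfil', {'location': 'London', 'date': 'tomorrow'})
```

The second turn carries no recognizable intent on its own, yet the manager can still act on it because the intent and location were kept from the first turn.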

Natural Language Generation (NLG) turns structured system data into natural, fluent sentences using steps like content planning and text realization.
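
The two steps named above can be illustrated with a small template-based generator: content planning chooses which facts to mention, and realization turns them into a sentence. The field names (`condition`, `high_c`, and so on) are assumptions for this sketch; neural NLG systems learn this mapping rather than hard-coding it.

```python
def realize(data: dict) -> str:
    """Turn structured weather data into one fluent sentence."""
    # Content planning: always mention the condition, add the high if known.
    facts = [data["condition"]]
    if "high_c" in data:
        facts.append(f"a high of {data['high_c']}°C")
    # Text realization: join the facts and apply sentence casing.
    body = " with ".join(facts)
    return f"{body[0].upper()}{body[1:]} in {data['location']} {data['date']}."


print(realize({
    "location": "London",
    "date": "tomorrow",
    "condition": "partly cloudy",
    "high_c": 18,
}))
# Partly cloudy with a high of 18°C in London tomorrow.
```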

The Game Changer: Large Language Models (LLMs)

LLMs like GPT revolutionize conversational AI by combining NLU, DM, and NLG in a single model. Trained on massive datasets, they deliver highly contextual and human-like conversations.

Putting It All Together

Example flow with an LLM-powered assistant:

- User: “What’s the weather in London tomorrow?”

- ASR: Transcribes the question.

- LLM: Understands the intent (get_weather), extracts the entities (London, tomorrow), and queries a weather API.

- LLM: Generates response: “Partly cloudy with a high of 18°C.”

- TTS: Converts the text to natural speech.
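
The two LLM steps in this flow are often wired up as a tool-calling loop: the model first emits a structured request, the application runs it, and the model then phrases the result. The sketch below assumes that pattern and fakes every external piece. `fake_llm` returns canned output in place of a real model call, and `get_weather` returns hard-coded data in place of a real weather API.

```python
import json


def fake_llm(messages: list) -> str:
    """Stand-in for an LLM call: requests a tool first, then writes the reply."""
    last = messages[-1]
    if last["role"] == "user":
        # Canned tool request; a real model would derive this from the question.
        return json.dumps({
            "tool": "get_weather",
            "args": {"location": "London", "date": "tomorrow"},
        })
    result = json.loads(last["content"])
    return f"{result['condition'].capitalize()} with a high of {result['high_c']}°C."


def get_weather(location: str, date: str) -> dict:
    # Hypothetical weather lookup with hard-coded data.
    return {"condition": "partly cloudy", "high_c": 18}


TOOLS = {"get_weather": get_weather}


def answer(question: str) -> str:
    messages = [{"role": "user", "content": question}]
    # First pass: the model decides which tool to call and with what arguments.
    request = json.loads(fake_llm(messages))
    result = TOOLS[request["tool"]](**request["args"])
    # Second pass: the model turns the tool result into a natural reply.
    messages.append({"role": "tool", "content": json.dumps(result)})
    return fake_llm(messages)


print(answer("What's the weather in London tomorrow?"))
# Partly cloudy with a high of 18°C.
```

In a voice assistant, ASR would supply the `question` string and the returned text would be passed on to TTS.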

The Future is Multimodal and Emotionally Aware

Next-gen conversational AIs will process not just text and voice, but also images and videos. Imagine showing a picture to your assistant and getting a descriptive answer. Emotional intelligence will also become key — enabling AIs to detect and respond empathetically to human emotions.

Conclusion

Modern conversational AI is built on a complex stack of cutting-edge technologies — from audio processing to neural language models. As these systems grow more advanced, our interactions with machines will become increasingly seamless and human-like. The AI revolution is already underway — and it's talking.
